This exercise can be done on any version of Postgres, and doesn’t require any other data – just runs by itself.

Let’s start by creating a table with an index:

|

1 2 3 4 5 6 7 8 9 10 11 12 |

DROP TABLE IF EXISTS recipes; CREATE TABLE recipes ( recipe_id SERIAL PRIMARY KEY, cuisine VARCHAR(100) NOT NULL, recipe_name VARCHAR(100) NOT NULL, favorited_by_users INTEGER default 0, views INTEGER default 0, last_updated_date TIMESTAMPTZ DEFAULT CURRENT_TIMESTAMP ); CREATE INDEX recipes_cuisine_recipe_name on recipes(cuisine, recipe_name); CREATE INDEX recipes_favorited_by_users on recipes(favorited_by_users); CREATE INDEX recipes_last_updated_date ON recipes(last_updated_date); |

Load it with a million rows:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 |

WITH adjectives AS ( SELECT UNNEST(ARRAY[ 'Delicious', 'Spicy', 'Savory', 'Sweet', 'Crispy', 'Tangy', 'Zesty', 'Hearty', 'Rich', 'Light', 'Creamy', 'Buttery', 'Crunchy', 'Juicy', 'Tender', 'Fluffy', 'Gooey', 'Mouthwatering', 'Decadent', 'Luscious', 'Aromatic', 'Smoky', 'Nutty', 'Buttery', 'Golden', 'Succulent', 'Velvety', 'Satisfying', 'Refreshing', 'Piquant', 'Fiery', 'Robust', 'Delicate', 'Vibrant', 'Earthy', 'Citrusy', 'Bold', 'Herbaceous', 'Tart', 'Peppery', 'Fragrant', 'Mellow', 'Fruity', 'Exquisite', 'Delectable', 'Rich', 'Sharp', 'Zingy', 'Heavenly', 'Appetizing', 'Smooth', 'Chewy', 'Moist', 'Silky', 'Bitter', 'Savory', 'Salty', 'Charred', 'Smoky', 'Caramelized', 'Mild', 'Spongy', 'Toasty', 'Ripe', 'Overripe', 'Underripe', 'Fresh', 'Zippy', 'Wholesome', 'Tempting', 'Spiced', 'Pungent', 'Delicate', 'Complex', 'Balanced', 'Sticky', 'Creamy', 'Warm', 'Cool', 'Cold', 'Bubbly', 'Crisp', 'Lively', 'Grassy', 'Juicy', 'Frosty', 'Glazed', 'Stuffed', 'Marinated', 'Infused', 'Poached', 'Braised', 'Pickled', 'Tossed', 'Sauteed', 'Roasted', 'Grilled', 'Barbecued', 'Seared', 'Simmered', 'Boiled' ]) AS adjective ), ingredients AS ( SELECT UNNEST(ARRAY[ 'Chicken', 'Turkey', 'Duck', 'Quail', 'Goose', 'Pheasant', 'Guinea Fowl', 'Cornish Hen', 'Rooster', 'Emu', 'Beef', 'Veal', 'Ground Beef', 'Steak', 'Ribs', 'Brisket', 'Oxtail', 'Tri-Tip', 'Beef Jerky', 'Carne Asada', 'Pork', 'Bacon', 'Ham', 'Sausage', 'Prosciutto', 'Pancetta', 'Pork Loin', 'Pork Shoulder', 'Pork Belly', 'Ground Pork', 'Salmon', 'Tuna', 'Cod', 'Haddock', 'Mackerel', 'Trout', 'Halibut', 'Sea Bass', 'Swordfish', 'Snapper', 'Shrimp', 'Lobster', 'Crab', 'Scallop', 'Clam', 'Oyster', 'Mussel', 'Squid', 'Octopus', 'Anchovy', 'Carrot', 'Broccoli', 'Cauliflower', 'Asparagus', 'Zucchini', 'Eggplant', 'Spinach', 'Kale', 'Bell Pepper', 'Mushroom', 'Chickpea', 'Lentil', 'Black Bean', 'Kidney Bean', 'Quinoa', 'Rice', 'Barley', 'Oat', 'Corn', 'Farro', 'Cheddar', 'Mozzarella', 'Parmesan', 'Feta', 'Yogurt', 'Butter', 'Cream', 'Milk', 'Ricotta', 'Cream Cheese', 'Almond', 'Walnut', 'Pecan', 'Cashew', 'Pine Nut', 'Chia Seed', 'Flax Seed', 'Pumpkin Seed', 'Sunflower Seed', 'Hazelnut', 'Tofu', 'Tempeh', 'Seitan', 'Egg', 'Coconut Milk', 'Honey', 'Maple Syrup', 'Soy Sauce', 'Miso', 'Tahini' ]) AS ingredient ), dishes AS ( SELECT UNNEST(ARRAY[ 'Casserole', 'Salad', 'Wrap', 'Soup', 'Stir-Fry', 'Sandwich', 'Pizza', 'Stew', 'Pasta', 'Skewers', 'Tacos', 'Burger', 'Sushi', 'Paella', 'Ravioli', 'Risotto', 'Gumbo', 'Chowder', 'Gratin', 'Quiche', 'Frittata', 'Lasagna', 'Tart', 'Pot Pie', 'Ramen', 'Pho', 'Burrito', 'Curry', 'Dim Sum', 'Dumplings', 'Gnocchi', 'Hotpot', 'Pancakes', 'Waffles', 'Crepes', 'Brownies', 'Muffins', 'Cupcakes', 'Meatloaf', 'Kebab', 'Samosa', 'Spring Roll', 'Egg Roll', 'Fajitas', 'Poke Bowl', 'Bibimbap', 'Ceviche', 'Chili', 'Nachos', 'Stuffed Peppers', 'Enchiladas', 'Quesadilla', 'Empanadas', 'Shakshuka', 'Tagine', 'Galette', 'Bruschetta', 'Tapas', 'Croquettes', 'Blini', 'Pierogi', 'Arepas', 'Tamales', 'Bao Buns', 'Roti', 'Naan Pizza', 'Jambalaya', 'Shepherd’s Pie', 'Cottage Pie', 'Tikka Masala', 'Teriyaki Bowl', 'Bento Box', 'Flatbread', 'Calzone', 'Piroshki', 'Eclairs', 'Soufflé', 'Tostadas', 'Cannelloni', 'Ziti', 'Manicotti', 'Hash Browns', 'Grilled Cheese', 'Panini', 'Charcuterie Board', 'Focaccia', 'Bagel Sandwich', 'Gyro', 'Pad Thai', 'Biryani', 'Satay', 'Churros', 'Fondue', 'Rice Bowl', 'Omelette', 'Pavlova', 'Trifle', 'Parfait', 'Timbale', 'Clafoutis' ]) AS dish ), cuisines AS ( SELECT UNNEST(ARRAY[ 'Italian', 'Mexican', 'Chinese', 'Indian', 'American', 'Japanese', 'Mediterranean', 'French', 'Thai', 'Korean', 'Cuban', 'Cajun', 'Ethiopian', 'Vietnamese', 'Spanish', 'Turkish', 'Moroccan', 'Greek', 'Brazilian', 'Caribbean', 'Russian', 'Polish', 'German', 'Lebanese', 'Persian', 'Afghan', 'Pakistani', 'Sri Lankan', 'Filipino', 'Indonesian', 'Malaysian', 'Singaporean', 'Argentinian', 'Chilean', 'Colombian', 'Peruvian', 'Venezuelan', 'Haitian', 'Jamaican', 'Hawaiian', 'South African', 'Nigerian', 'Ghanaian', 'Kenyan', 'Tanzanian', 'Ugandan', 'Eritrean', 'Somali', 'Sudanese', 'Zimbabwean', 'Irish', 'Scottish', 'Welsh', 'English', 'Belgian', 'Swiss', 'Austrian', 'Swedish', 'Norwegian', 'Finnish', 'Danish', 'Icelandic', 'Australian', 'New Zealand', 'Egyptian', 'Algerian', 'Tunisian', 'Libyan', 'Saudi', 'Emirati', 'Jordanian', 'Syrian', 'Iraqi', 'Yemeni', 'Georgian', 'Armenian', 'Azerbaijani', 'Uzbek', 'Kazakh', 'Mongolian', 'Tibetan', 'Nepalese', 'Bhutanese', 'Bangladeshi', 'Burmese', 'Laotian', 'Cambodian', 'Taiwanese', 'Hunan', 'Szechuan', 'Cantonese', 'Shanghainese', 'Puerto Rican', 'Guatemalan', 'Costa Rican', 'Salvadoran', 'Nicaraguan', 'Honduran', 'Panamanian', 'Bolivian' ]) AS cuisine ) INSERT INTO recipes (cuisine, recipe_name, favorited_by_users, last_updated_date) SELECT cuisines.cuisine, adjectives.adjective || ' ' || ingredients.ingredient || ' ' || dishes.dish AS recipe_name, floor(random() * 1000) AS favorited_by_users, NOW() - (random() * interval '1 year') AS last_updated_date FROM adjectives CROSS JOIN ingredients CROSS JOIN dishes CROSS JOIN cuisines order by RANDOM() LIMIT 1000000; |

Take a look at the data we loaded:

Vacuum the table to make sure it’s nice and compact, then check the size of the index on last_updated_date:

|

1 2 3 |

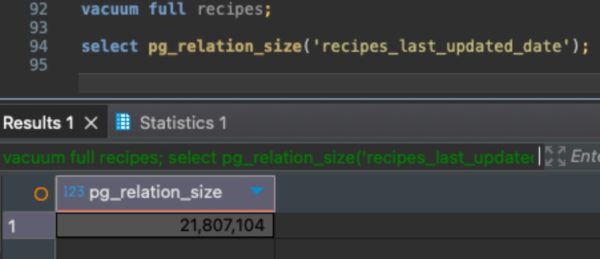

vacuum full recipes; select pg_relation_size('recipes_last_updated_date'); |

It’s about 22MB:

Now update about 1/4 of the rows:

|

1 2 3 4 |

update recipes set cuisine = 'Jeju', last_updated_date = NOW() where recipe_id % 4 = 0; |

Vacuum the table again, and check the size of the index:

|

1 2 3 |

vacuum full recipes; select pg_relation_size('recipes_last_updated_date'); |

Wait – the new size is – smaller?

And that’s your challenge: figure out why the index’s size dropped in size after we updated about 25% of the rows. Good luck, have fun, leave your answer in the comments, and we’ll circle back next week with the explanation.

9 Comments. Leave new

I suppose that since it’s a B-tree index type, there are less nodes now because we updated 25% of the rows to have the same value for last_updated_date column

Yep, good work!

All the duplicate values for the date are basically one entry as it defaults to a b-tree.

There you go! Good work!

Brent, what tool do you use to query? I have been using azure data studio… but they are getting rid of this great product and sending people to vscode… which… they tried… but the postgres extensions seem to be a headache.. chatgpt at least got me here and told me you have mentioned using postico.. I’m on windows. What do you recommend?

Thanks!

DBeaver.

Hi Brent: You mentioned DBeaver. Are you getting what you need from the free version or are you paying for a more feature-rich edition of the tool? Thank you!

Great question! I tend to just teach with free tools to keep the audience costs down where I can, and reach more readers.

Thank you Brent for taking time to reply.